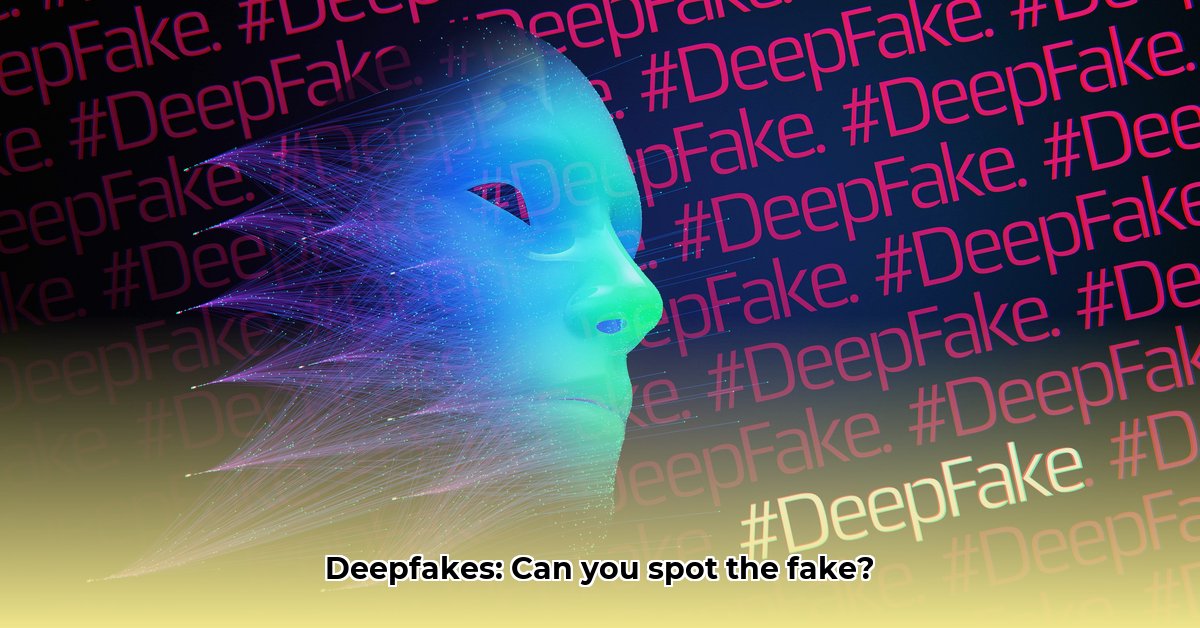

Imagine a world where realistic-looking fake videos and audio of anyone doing anything are commonplace. This is the reality of deepfakes, impacting elections, spreading misinformation, damaging reputations, and threatening national security. Let’s explore this escalating threat and the multifaceted strategies required to combat it.

Deepfake Technology: The Looming Crisis for Truth and Media

The rapid rise of deepfake technology, fueled by advances in artificial intelligence (AI), presents a significant and evolving threat to truth, trust, and media integrity. Convincingly realistic fake videos and audio recordings can deceive even discerning viewers, which threatens democratic processes, personal relationships, business operations, and international relations. Understanding how AI-driven deception works is therefore essential for media consumers and producers alike, and the sophistication of deepfakes calls for a proactive, comprehensive approach to detection and mitigation.

Unmasking the Magic (or the Menace) of Deepfakes

Deepfakes use sophisticated AI algorithms, often trained on large collections of images, audio, and video of a person, to convincingly mimic that person's face, voice, and mannerisms. The result is fabricated content that appears genuine and is often indistinguishable from reality to the untrained eye. The technology is also becoming increasingly accessible as user-friendly software lowers the barrier to creating deepfakes. That accessibility comes at the cost of an increased risk of misuse, which demands greater vigilance and robust countermeasures.

The Real-World Impact: Beyond the Hype

The potential consequences of deepfakes are far-reaching and devastating, including spreading misinformation, influencing elections, committing fraud, manipulating financial markets, and eroding trust in media and institutions. The risk extends to personal lives, business operations, and national security, with the potential to incite violence, damage reputations, and disrupt diplomatic relations. A single, convincingly rendered fabrication can have significant, widespread, and long-lasting implications. The rise in election interference via deepfakes and their potential impact on societal trust present a clear and present danger, requiring immediate action to safeguard democratic processes.

Spotting the Imposters: The Never-Ending Game of Cat and Mouse

Detecting deepfakes involves identifying subtle flaws and inconsistencies such as unnatural blinking, inconsistent lighting, distorted audio, or unusual background elements. However, deepfake creators are constantly improving their techniques, using ever more sophisticated AI models to eliminate these telltale signs, which makes detection increasingly difficult. This ongoing technological tug-of-war requires constant innovation in detection methods, sustained research and development in forensic analysis, and a healthy dose of skepticism.
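One of the cues mentioned above, unnatural blinking, can be turned into a simple check. The following is a minimal sketch rather than a production detector. It assumes that an upstream face-tracking step (not shown) already yields a per-frame eye-openness value between 0 and 1. The function names, the `closed_below` threshold, and the plausible blink-rate range are illustrative assumptions, not values taken from any particular tool.

```python
from typing import Sequence


def count_blinks(eye_openness: Sequence[float], closed_below: float = 0.2) -> int:
    """Count blinks as open-to-closed transitions in a per-frame signal."""
    blinks = 0
    was_closed = False
    for value in eye_openness:
        is_closed = value < closed_below
        if is_closed and not was_closed:
            blinks += 1  # a new blink starts when the eye first closes
        was_closed = is_closed
    return blinks


def blink_rate_is_suspicious(
    eye_openness: Sequence[float],
    fps: float,
    min_per_minute: float = 5.0,   # assumed lower bound, for illustration only
    max_per_minute: float = 40.0,  # assumed upper bound, for illustration only
    closed_below: float = 0.2,
) -> bool:
    """Flag a clip whose blink rate falls outside the assumed plausible range."""
    if not eye_openness or fps <= 0:
        raise ValueError("need at least one frame and a positive frame rate")
    minutes = len(eye_openness) / fps / 60.0
    rate = count_blinks(eye_openness, closed_below) / minutes
    return rate < min_per_minute or rate > max_per_minute
```

A heuristic like this is easy for newer generation techniques to defeat, so it is best treated as one weak signal among several rather than as a verdict.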

Fighting Back: A Multi-Front Battle

Combating deepfakes requires a comprehensive and coordinated approach involving technological countermeasures, legal frameworks, public education, and international collaboration:

- Technological Countermeasures: Developing advanced deepfake detection software that analyzes video and audio with a high degree of accuracy, using AI to fight AI. Researchers are working on algorithms that can identify the subtle inconsistencies of deepfake content, including facial anomalies, audio distortions, and contextual discrepancies. This evolving technology is a crucial area of focus that requires significant investment and continuous improvement.

- Strengthening the Legal Framework: Establishing clear and enforceable legal frameworks to address the misuse of deepfakes, including provisions for accountability and penalties, while carefully protecting freedom of speech and ensuring due process. This requires balancing the need to deter malicious use with the fundamental right to express oneself freely.

- Empowering the Public with Media Literacy: Educating the public to think critically about online information, spot red flags, and verify sources before sharing content. Questioning claims, checking multiple sources, and understanding the techniques used to create deepfakes are crucial skills in the digital age.

- Collaboration and Information Sharing: Tech companies, researchers, governments, media organizations, and international bodies must collaborate, share information, and agree on standards for deepfake detection and mitigation. This includes sharing datasets, best practices, and research findings to accelerate the development of effective countermeasures.

- Developing Immutable Authentication Trails: Implementing systems that allow individuals and organizations to create verifiable records of their activities, making it more difficult to create convincing deepfakes that misrepresent their actions or statements. This could involve the use of blockchain technology or other secure methods of authentication.
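The authentication-trail idea in the last item can be illustrated with a hash chain, which is the core mechanism behind blockchain-style ledgers. The sketch below is a simplified, assumption-laden illustration: the record layout and the function names (`append_record`, `verify_trail`, `media_is_registered`) are hypothetical, and a real system would also need digital signatures and trusted timestamps, which are omitted here.

```python
import hashlib
import json
from typing import Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def _entry_hash(prev_hash: str, media_hash: str, note: str) -> str:
    """Hash a record's fields together with the previous record's hash."""
    payload = json.dumps(
        {"prev": prev_hash, "media": media_hash, "note": note}, sort_keys=True
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_record(trail: List[Dict[str, str]], media: bytes, note: str) -> Dict[str, str]:
    """Append a record for a published media file to the trail."""
    prev_hash = trail[-1]["entry_hash"] if trail else GENESIS
    media_hash = hashlib.sha256(media).hexdigest()
    record = {
        "prev_hash": prev_hash,
        "media_hash": media_hash,
        "note": note,
        "entry_hash": _entry_hash(prev_hash, media_hash, note),
    }
    trail.append(record)
    return record


def verify_trail(trail: List[Dict[str, str]]) -> bool:
    """Return True only if no record has been altered, removed, or reordered."""
    prev_hash = GENESIS
    for record in trail:
        expected = _entry_hash(record["prev_hash"], record["media_hash"], record["note"])
        if record["prev_hash"] != prev_hash or record["entry_hash"] != expected:
            return False
        prev_hash = record["entry_hash"]
    return True


def media_is_registered(trail: List[Dict[str, str]], media: bytes) -> bool:
    """Check whether the exact bytes of a media file appear in the trail."""
    digest = hashlib.sha256(media).hexdigest()
    return any(record["media_hash"] == digest for record in trail)
```

A chain like this is tamper-evident rather than strictly immutable: changing any earlier record breaks verification of the chain. Note also that an exact hash match fails as soon as a file is re-encoded or cropped, so this only confirms byte-identical copies of the original.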

Assessing the Risks: Who’s Most Vulnerable?

Deepfakes pose different levels of risk to different sectors, with some sectors being more vulnerable than others due to the nature of their operations or the potential impact of a successful deepfake attack.

| Sector | Likelihood of Deepfake Attack | Impact of a Successful Attack | Overall Risk | Examples of Potential Attacks |
|---|---|---|---|---|
| Politics | Very High | Extremely High | Extremely High | Deepfakes used to spread misinformation about candidates, influence elections, or incite political violence. |
| Media | Very High | High | Very High | Deepfakes used to create fake news stories, damage the reputation of journalists, or undermine public trust in the media. |
| Business | High | High | High | Deepfakes used to impersonate executives, manipulate financial markets, or damage the reputation of a company. |
| Personal Relationships | Medium | Medium | Medium | Deepfakes used to create fake intimate videos, spread rumors, or damage personal relationships. |
| National Security | Very High | Extremely High | Extremely High | Deepfakes used to spread disinformation, incite international conflict, or undermine national security interests. |
| Critical Infrastructure | Medium | Extremely High | High | Deepfakes used to launch social engineering attacks against critical infrastructure personnel, potentially leading to disruptions or catastrophic failures. |
| Financial Institutions | High | Very High | High | Deepfakes used to commit fraud, manipulate financial markets, or launder money. |
| Healthcare | Medium | High | Medium | Deepfakes used to spread misinformation about medical treatments, damage the reputation of healthcare professionals, or compromise patient privacy. |
| Education | Low | Medium | Low | Deepfakes used to harass students or faculty, create fake academic records, or spread misinformation about educational institutions. |

This risk assessment will change as the technology advances, so mitigation strategies require continuous monitoring and adaptation.

The Road Ahead: A Collective Responsibility

Deepfakes pose a serious and escalating threat to truth, trust, and media. Immediate and sustained action is crucial to protect information ecosystems, democratic processes, and national security. Collective responsibility, informed caution, and proactive measures are essential in navigating this complex and evolving landscape.

How to Detect Sophisticated Deepfake Videos Using AI Detection Tools

The increasing sophistication of deepfakes means that society needs the ability to detect these forgeries, both by using advanced AI detection tools and by fostering critical thinking skills.

Key Takeaways:

- Deepfake detection is a critical field for combating misinformation and protecting against malicious actors.

- AI-powered tools offer promising solutions but face challenges in accuracy, reliability, and scalability.

- Standardized testing methodologies and datasets are needed for objective comparison and evaluation of detection tools.

- Ethical concerns about privacy, bias, and potential misuse require careful consideration.

- Collaboration between researchers, developers, policymakers, and the public is essential to address this growing threat.

The Deepfake Arms Race: A Technological Tug-of-War

The sophistication of AI-generated deepfakes threatens the reliability of information and drives a constant arms race in the development of effective detection methods. As deepfakes become more convincing and harder to detect, the demand for robust AI forgery detection underscores the need for advances in defensive technology.

Detecting Deepfakes: The Power of AI

AI is increasingly being used to combat AI-generated fakery. AI-powered detection tools employ algorithms such as CNNs (convolutional neural networks, which process and analyze visual data), RNNs (recurrent neural networks, which identify patterns in sequences), and hybrid models that combine the two for improved accuracy and efficiency. These tools analyze various features of video and audio, including facial expressions, lip movements, audio distortions, and contextual inconsistencies, to identify potential deepfakes. These systems have limitations, however, and deepfake creators often stay one step ahead by constantly evolving their techniques to evade detection.
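To make the idea of combining per-frame analysis with sequence analysis concrete, here is a deliberately simplified sketch. It assumes a frame-level model (not shown) has already produced a "fake" score between 0 and 1 for each frame, and it uses an exponential moving average as a crude stand-in for the sequence-modeling stage. It is not a CNN or an RNN, and the function names, `alpha`, and `threshold` are illustrative assumptions.

```python
from typing import List, Sequence


def smooth_scores(frame_scores: Sequence[float], alpha: float = 0.3) -> List[float]:
    """Exponentially smooth per-frame scores so isolated spikes count for less."""
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    smoothed: List[float] = []
    running = None
    for score in frame_scores:
        # The first frame seeds the average; later frames are blended in.
        running = score if running is None else alpha * score + (1 - alpha) * running
        smoothed.append(running)
    return smoothed


def video_is_flagged(
    frame_scores: Sequence[float], threshold: float = 0.5, alpha: float = 0.3
) -> bool:
    """Flag a video when the smoothed score reaches the threshold at any point."""
    smoothed = smooth_scores(frame_scores, alpha)
    if not smoothed:
        raise ValueError("need at least one frame score")
    return max(smoothed) >= threshold
```

The design choice here is that a single anomalous frame should not trigger a flag, while a sustained run of suspicious frames should. A trained sequence model would learn that kind of temporal pattern from data rather than from a fixed smoothing rule.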

Challenges and Limitations: The Need for Standardization

A lack of standardized testing methodologies and publicly available datasets hinders fair comparisons and objective evaluation of deepfake detection tools. Researchers must establish common datasets, evaluation metrics, and testing protocols to ensure that detection tools are accurate, reliable, and scalable. Ethical implications, such as biased outcomes, privacy infringement, and the potential for misuse of detection tools, are substantial and require careful consideration.
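As an illustration of what shared evaluation metrics could look like, the sketch below scores a detector's predictions against ground-truth labels on a common test set. The choice of metrics and the function name `evaluate_detector` are assumptions made for this example, not an established benchmark protocol.

```python
from typing import Dict, Sequence


def evaluate_detector(labels: Sequence[bool], predictions: Sequence[bool]) -> Dict[str, float]:
    """Compute basic metrics, where True means 'this item is a deepfake'."""
    if len(labels) != len(predictions) or not labels:
        raise ValueError("labels and predictions must be non-empty and equal in length")

    pairs = list(zip(labels, predictions))
    tp = sum(1 for y, p in pairs if y and p)          # fakes correctly flagged
    fp = sum(1 for y, p in pairs if not y and p)      # real items wrongly flagged
    fn = sum(1 for y, p in pairs if y and not p)      # fakes that were missed
    tn = sum(1 for y, p in pairs if not y and not p)  # real items correctly passed

    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "false_positive_rate": fp / (fp + tn) if fp + tn else 0.0,
    }
```

Reporting several metrics matters because accuracy alone can mislead when a test set contains far more real items than fakes. Comparing two tools fairly also requires running both on the same labeled dataset.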

A Multi-faceted Approach: Beyond the Technology

Effective deepfake detection demands a multi-pronged approach that goes beyond technology and encompasses education, policy, and collaboration:

- Education and Awareness: Teaching individuals to critically evaluate online information, spot red flags, and verify sources before sharing content. Spotting clues like unnatural blinking, inconsistent lighting, distorted audio, and unusual background elements improves detection and helps to prevent the spread of misinformation.

- Improved Tool Development: Refining AI detection tools, focusing on hybrid models, addressing vulnerabilities, and improving accuracy and scalability. This requires continuous research and development, as well as collaboration between researchers and developers.

- Robust Verification Processes: News organizations and social media platforms need stringent processes to verify videos and audio content before publishing or sharing it, including fact-checking, source verification, and the use of deepfake detection tools.

- Policy and Regulation: Governments must consider the ethical and legal implications of deepfakes and develop regulations balancing free speech with combating malicious content. Regulations should address the creation, distribution, and use of deepfakes, as well as the potential for bias, privacy infringement, and misuse of detection tools.

- Collaborative Efforts: Sharing data, practices, and research enhances detection effectiveness. This includes sharing datasets of deepfake and real videos, best practices for detection and mitigation, and research findings on the latest deepfake techniques.

The Path Forward: A Collaborative Effort

Progress depends on cooperation: researchers, tech developers, policymakers, and the public must work together to protect the integrity of media and information. This requires open communication, shared resources, and a commitment to developing effective and ethical solutions to the deepfake challenge.

Deepfake Detection in Social Media: Combating Misinformation and Protecting Users

The rising tide of deepfakes needs to be met with a corresponding rise in prevention and proactive measures to protect our sources of information and combat the spread of misinformation on social media platforms.

Key Takeaways:

- Deepfake technology poses a significant and growing threat to online information integrity and the trustworthiness of social media platforms.

- A multifaceted approach is crucial, involving technological solutions, policy measures, public education, and collaborative efforts.

- Current detection methods are improving but face challenges in accuracy, scalability, and adaptability.

- Collaboration between social media platforms, researchers, policymakers, and the public is essential to address this complex problem.

- Individuals need to enhance critical thinking skills, media literacy, and awareness of deepfake techniques to avoid falling victim to misinformation.

The Deepfake Deluge: A New Era of Deception

Hyperrealistic synthetic media blurs the lines between reality and fiction, creating a challenging environment where it is increasingly difficult to distinguish between authentic and fabricated content. Deepfake detection is a necessity in this treacherous information landscape, requiring constant vigilance and a proactive approach to combating misinformation.

How Deepfakes Work: The Illusion of Authenticity

Deepfakes exploit powerful artificial intelligence (AI) algorithms that learn from vast datasets to create convincing fakes. These algorithms analyze and synthesize images, audio, and video data to generate realistic synthetic content that can be used to impersonate individuals, spread false information, or manipulate public opinion.

The Multimodal Challenge: Beyond the Video Clip

The challenge is not limited to video: audio and text are also used to spread misinformation, which complicates detection efforts. Countering audio- and text-based disinformation is imperative as fraudulent content grows more inventive and spreads through multiple channels.

Technological Responses: An Ongoing Arms Race

Researchers are developing detection techniques involving advanced AI algorithms, including deep learning models, neural networks, and forensic analysis tools. These techniques analyze various features of videos and audio, such as facial expressions, lip movements, audio distortions, and contextual inconsistencies, to identify potential deepfakes. As deepfake generation improves, detection methods must adapt and evolve to stay ahead of the curve.

The Human Element: Media Literacy and Critical Thinking

Education and critical thinking are essential in combating deepfakes and protecting against misinformation. Enhancing media literacy empowers individuals to evaluate information critically, question claims, and verify sources before sharing content. This includes teaching people how to identify common deepfake techniques, recognize biases, and assess the credibility of online sources.

A Collaborative Effort: The Way Forward

Combating deepfakes requires a unified front involving social media platforms, researchers, policymakers, and the public. Social media platforms must invest in content moderation, develop robust detection tools, and promote media literacy among their users. Governments need clear legal frameworks to address the creation and distribution of deepfakes, while protecting freedom of speech. Researchers must continue to develop and improve detection techniques, and the public must be educated about the risks of deepfakes and the importance of critical thinking.

Looking Ahead: Navigating Uncertain Waters

The future of information integrity depends on technological innovation, proactive policies, and empowered citizens. By working together, we can navigate the uncertain waters of the deepfake era and protect against the spread of misinformation.

Deepfake Technology and National Security: Assessing Risks and Developing Mitigation Strategies

The threat deepfakes pose to truth and media extends to national security, where the potential damage is severe and calls for a comprehensive, coordinated approach to assessing risks and developing mitigation strategies.

Key Takeaways:

- Deepfakes pose a growing and increasingly sophisticated threat to national security, with the potential to undermine trust, sow discord, and incite violence.

- Potential misuse in disinformation campaigns, propaganda efforts, and social engineering attacks is alarming and requires immediate attention.

- Current detection methods have limitations and cannot reliably identify all deepfakes, making it crucial to develop more advanced and robust detection tools.

- A multi-pronged approach is needed, involving technological advancements, legal frameworks, public education, international collaboration, and intelligence gathering.

- International collaboration is crucial to share information, coordinate efforts, and develop common standards for combating deepfakes.

The Evolving Threat Landscape

Deepfake technology creates realistic fabricated content, posing a significant and evolving threat to national security that requires continuous monitoring and adaptation of mitigation strategies. The rise of nation-state-sponsored deepfake campaigns intensifies existing threats and calls for immediate, well-coordinated responses from governments, tech companies, and international organizations.

Disinformation and Deception

Malicious actors leverage deepfakes to spread disinformation, sow discord, and undermine trust in institutions and governments. This can be used to manipulate public opinion, incite violence, and disrupt diplomatic relations.

Vulnerable Infrastructure

Critical infrastructure is vulnerable to deepfake-driven social engineering attacks, which could disrupt essential services with severe consequences for society. Likely targets include critical infrastructure personnel such as power plant operators, transportation workers, and government officials.

The Arms Race: Detection vs. Creation

New methods for creating deepfakes constantly emerge, outpacing defensive technologies and requiring continuous innovation in detection methods. This ongoing arms race necessitates a sustained commitment to research and development, as well as collaboration between researchers, developers, and policymakers.

Mitigation Strategies: A Multifaceted Approach

- Technological Advancements: Improved deepfake detection is paramount, requiring continuous investment in research and development of advanced detection tools and techniques. This includes developing AI-powered detection tools, forensic analysis methods, and authentication technologies.

- Legal Frameworks: Stronger laws are needed to criminalize malicious use of deepfakes, including provisions for accountability and penalties. This requires balancing the need to deter malicious use with the fundamental right to express oneself freely.

- Public Education: Widespread media literacy is crucial to empower individuals to critically evaluate online information, spot red flags, and verify sources before sharing content. This includes teaching people how to identify common deepfake techniques, recognize biases, and assess the credibility of online sources.

- International Collaboration: Shared intelligence and coordinated efforts are indispensable to combat the global threat of deepfakes. This includes sharing data, best practices, and research findings, as well as coordinating law enforcement efforts and developing common standards for deepfake detection and mitigation.

- Intelligence Gathering: Gathering intelligence on the actors creating and using deepfakes is essential to disrupt their operations and prevent future attacks. This requires investing in human intelligence, signals intelligence, and cyber intelligence capabilities.

The Role of Governments and Tech Companies

Governments and tech companies must collaborate, invest in research, develop strategies, and improve content moderation to combat the threat of deepfakes to national security. This includes sharing data, developing detection tools, and promoting media literacy, as well as coordinating law enforcement efforts and developing legal frameworks to address the malicious use of deepfakes.